DASS™: Infinite-Scale, Highly Available Software-Defined Storage

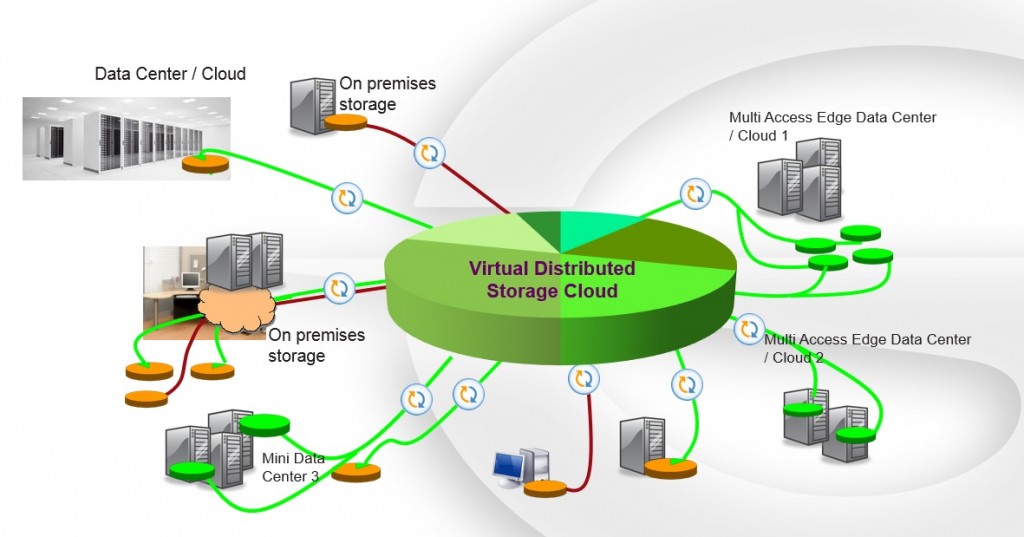

We are developing deep storage technology for the next generation of hybrid and multi-cloud architectures.

DASS technology enables a wide variety of cloud, edge and hybrid storage applications in industries such as enterprise storage, 5G, IoT and consumer backup.

Enabling technology for cloud storage solutions:

- Object Storage

- Backup & Recovery

- Hybrid Cloud

- Multi-site storage and data transfer protocol

- Virtualized infrastructure at the Edge / MEC

- Software-defined scale-out NAS

- CDN/caching

- DR/DRaaS: Disaster Recovery as a Service

- Consumer and SMB online backup - "The AirBnB of Storage"

The core of the solution is a robust, pro-active distributed storage algorithm based on Forward Error Correction (FEC). It is coupled with a fast and extremely robust UDP-based data restoration and delivery protocol that can restore data from multiple sources simultaneously with very low latency.

Distributed Adaptive Storage & Streaming™ (DASS) is a robust, distributed and secure cloud technology. It leverages the universal presence of existing or new storage nodes and the countless fragments of storage and bandwidth resources they can provide, together with sophisticated distributed data storage and security algorithms.

Technology:

FEC Based Encoding

Redundancy and High Availability are integrated into the data itself, eliminating reliance on replication of data on the network.
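To illustrate how redundancy can live inside the encoded data rather than in full replicas, here is a minimal sketch using a single XOR parity fragment. This is a deliberately simplified stand-in and not the DASS encoding; the function names `encode` and `recover` and the parameter `k` are assumptions made for this example. Any one of the k+1 fragments can be lost and the data is still recoverable.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size fragments and append one XOR parity fragment."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    fragments = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = reduce(xor_bytes, fragments)
    return fragments + [parity]


def recover(fragments: List[Optional[bytes]], original_len: int) -> bytes:
    """Rebuild the data when at most one of the k+1 fragments is missing (None)."""
    missing = [i for i, f in enumerate(fragments) if f is None]
    if len(missing) > 1:
        raise ValueError("a single parity fragment can repair only one loss")
    repaired = list(fragments)
    if missing:
        present = [f for f in fragments if f is not None]
        # XOR of all surviving fragments equals the missing one.
        repaired[missing[0]] = reduce(xor_bytes, present)
    # Drop the parity fragment and strip the padding.
    return b"".join(repaired[:-1])[:original_len]
```

Production erasure codes (Reed-Solomon, for example) tolerate the loss of several fragments at once; the single-parity scheme above only shows the principle at a storage overhead of 1/k.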

5 layers of security for data protection and access restriction

AES-256 encryption, FEC obfuscation, encryption on the remote storage node with a client key, and validation of each processing layer with SHA-256 hashes.
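The hash validation step can be sketched with Python's standard library. This is an illustrative assumption about how a fragment might be checked against a stored SHA-256 digest, not the DASS implementation; the helper names `fingerprint` and `verify` are hypothetical. The encryption layers are omitted because AES is not part of the standard library.

```python
import hashlib
import hmac


def fingerprint(fragment: bytes) -> str:
    """Return the SHA-256 digest of a fragment as a hex string."""
    return hashlib.sha256(fragment).hexdigest()


def verify(fragment: bytes, expected_hex: str) -> bool:
    """Check a fragment against its recorded digest using a constant-time comparison."""
    return hmac.compare_digest(fingerprint(fragment), expected_hex)
```

A receiver would call `verify` on each fragment before passing it to the next processing layer and discard any fragment that fails the check.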

Pro-Active Storage™

Patented technology that pro-actively pre-populates encoded, resilient data fragments on any storage node (on-prem, in the cloud, or hybrid) and enables high availability of content from any storage node or device.

Automatic Data Healing

Data availability is maintained by self-healing of data across the network. DASS healing automatically monitors the network and, if any storage node goes offline, creates additional sources and data inventory on the fly for every required fragment of data.
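One way to picture a healing pass is as a planning step over a placement map. The sketch below is an assumption made for illustration only: the function `plan_healing`, the `placement` mapping and the `min_sources` threshold are hypothetical names, and the actual DASS healing logic is not described in this document.

```python
from typing import Dict, List, Set


def plan_healing(
    placement: Dict[str, Set[str]],
    online: Set[str],
    min_sources: int,
) -> Dict[str, List[str]]:
    """Return {fragment_id: [target nodes]} for fragments with too few live sources."""
    plan: Dict[str, List[str]] = {}
    for fragment_id, holders in placement.items():
        live = holders & online
        deficit = min_sources - len(live)
        if deficit <= 0:
            continue  # enough live sources, nothing to heal
        # Pick online nodes that do not already hold this fragment.
        candidates = sorted(online - holders)
        plan[fragment_id] = candidates[:deficit]
    return plan
```

In an FEC-based system, a fragment with no live source left would be regenerated from other surviving fragments before being placed; this sketch covers only the decision of where new copies should go.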

Asynchronous Coded Multi-Source Streaming

Patented UDP-based data transfer and streaming protocol, providing "Netflix-like" video and gaming streaming capabilities. It can obtain data in random order from dozens of sources simultaneously and assemble media on the fly as fragments arrive asynchronously.
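The idea of assembling media from chunks that arrive in arbitrary order can be sketched as a small reordering buffer. This is a simplified illustration under stated assumptions: it handles plain indexed chunks rather than coded fragments, it leaves out the network layer, and the class name `Reassembler` is hypothetical rather than part of DASS.

```python
from typing import Dict


class Reassembler:
    """Buffers out-of-order chunks and releases them in playback order."""

    def __init__(self) -> None:
        self._pending: Dict[int, bytes] = {}
        self._next = 0  # index of the next chunk the player needs

    def receive(self, index: int, payload: bytes) -> bytes:
        """Accept a chunk from any source; return the bytes that are now playable."""
        # Ignore duplicates and chunks that were already delivered.
        if index >= self._next and index not in self._pending:
            self._pending[index] = payload
        ready = bytearray()
        while self._next in self._pending:
            ready += self._pending.pop(self._next)
            self._next += 1
        return bytes(ready)
```

Because duplicates are dropped, the same chunk can be requested from several sources at once and whichever copy arrives first is used.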

Cross-Platform and Low Footprint

Interoperable across any OS and device. Low CPU, RAM and disk usage allow deployment on high-end servers as well as low-end IoT endpoints.

Network Operator Friendly

Off-peak load balancing leverages excess and idle bandwidth, with workload- and geo-optimized data retrieval.

Management, Control and Reporting

Provides a real-time view of network performance, as well as aggregate statistics on data storage and restoration behavior, KPIs, network efficiency, content distribution, cost effectiveness and more.

Intellectual Property:

Giraffic DASS technology is recognized by the industry as deep IP in the storage space (our patents are cited by IBM, Amazon, Google, AT&T, Dropbox, Verizon and SAP).

Adaptive Video Acceleration (AVA™)

All Rights Reserved (C) Giraffic Inc. 2023